Back to courseware

Back to courseware

[Use arrow keys to navigate, "s" to show speaker notes, and "f" for fullscreen.]

The journey of data analysis often needs to start with hindsight - taking stock of data from past events and searching for an explanatory hypothesis.

In analyzing that data, you will uncover insights as to what patterns exist in the data, and what variables contribute to outcomes.

From those insights, you can develop foresight - the ability to predict how certain actions or external factors will impact future outcomes.

Related to the notion of Hindsight, Insight, and Foresight, you can classify types of analysis in four main categories:

Analyze what is happening now and in the past by characterizing data and uncovering patterns

Determine what happened and why, determine the causal and correlative relationships between variables and outcomes

Forecast future events based on extrapolating from past data

Determine next steps, recommend actions to take to achieve a specific outcome

Data Governance is the practice of ensuring the usability, quality, security, and availability of data within an organization.

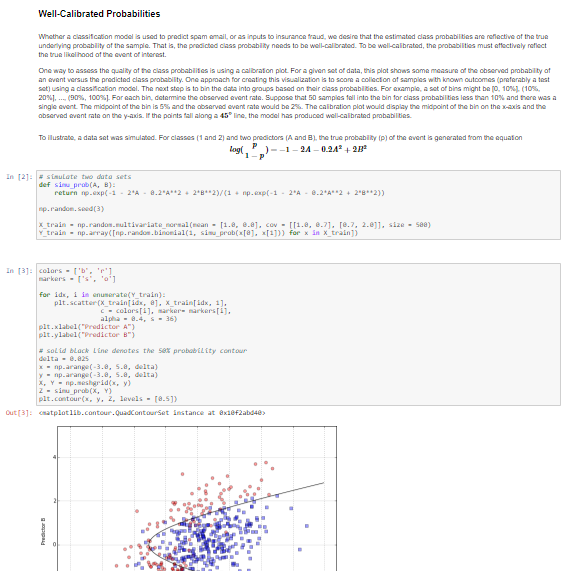

"The Jupyter Notebook is an open-source web application that allows you to create and share documents that contain live code, equations, visualizations and explanatory text. Uses include: data cleaning and transformation, numerical simulation, statistical modeling, machine learning and much more."� --Project Jupyter

Example: Applied Predictive Modeling with Python (next slide)

“It’s the context around the data that provides value and that’s what will make people listen and engage.” --James Richardson, Senior Director Analyst, Gartner

There are so many choices of how to represent data, how can you choose?

The vega community has a fantastic breakdown with examples and when to use each: https://goo.gl/wpeXnW